The Wrong Way to Test & Optimize

In Module 4 of his Metatrader Back Testing & Optimization Course, Rob Casey explains that "one of the biggest mistakes new traders make when back testing their robots is to take 'all' of the historical data they have and run a single test over the entire set of data. So if they have 4 years of history then they would run a 4 year back test. If the results are good they move into live trading with those settings. If the results are bad they pick new settings and run a new test over the full 4 years. Then they repeat this process until they find settings that seem to work well for those 4 years."

The course goes on to explain that "... for many the process just described constitutes their idea of back testing and optimization. The reason people often test and optimize using all of their data in this way is because they’ve heard that it’s important to see how well the robot would have done in a variety of market conditions. Since market conditions are always changing it stands to reason that testing over the longest period of time possible is the best way to test the robot in all market conditions. Unfortunately testing the robot over the whole history in one long test is absolutely the wrong thing to do and can lead you to trade an over-optimized robot without even knowing it."

There are two really big problems with this approach that we’re going to talk about in the section titled "How To Optimize A Forex Robot". But first, to drive the point home, it might be instructive to have a look at an Over Optimization Example.

An Over Optimization Example

Most of the sales pages for any forex robot or expert advisor will show "extreme over-optimization" examples. However, you will never know they are over-optimized because you do not have their settings. Over-optimization occurs when you focus your optimization on one period, say 1/1/2009 to 12/31/2009, and run hundreds or thousands of tests to see which settings would have yielded the greatest results on that historical data.

Instead of testing those particular settings on other years and finding the best overall settings that work each year to some degree, you just take your curve-fitted settings and attempt to use them on a live account. What’s happening here is that you are basically cherry-picking your trades, because you already know what the market was like on the data you are backtesting. This all sounds great until your forward test starts and the results are drastically different ...
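The difference between picking settings that won a single year and picking settings that "work each year to some degree" can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, the profit figures are made-up placeholders rather than real back test results, and "work each year to some degree" is interpreted here as "has the best worst year", which is one possible reading and not necessarily the course's own rule.

```python
def best_single_year(results, year):
    """Settings with the highest profit in one chosen year (the curve-fitted pick)."""
    return max(results, key=lambda name: results[name][year])


def most_consistent(results):
    """Settings whose worst year is the least bad (assumed reading of
    'work each year to some degree')."""
    return max(results, key=lambda name: min(results[name].values()))


# Made-up net profit per settings label and year, for illustration only.
results = {
    "A": {2007: -1200, 2008: -800, 2009: 5000},
    "B": {2007: 600, 2008: 400, 2009: 900},
}

print(best_single_year(results, 2009))  # A: looks best if you only test 2009
print(most_consistent(results))         # B: holds up in every year
```

In this toy table the settings that look best on 2009 alone lose money in both other years, which is the pattern the paragraph above warns about.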

Now what most people do is take these results, grab the most profitable settings, and then run with those in their live account. This is one reason people end up completely decimating their accounts.

Most people simply run the test on all available data, in this case 1/1/2006 to 12/31/2007, and optimize the settings for that data. The problem is that you are still over-optimizing, just on a larger amount of data. You are simply asking MT4, "What settings would have filtered out most of the bad trades and given me the most profit?"

Instead, by using our methods of robot optimization you are going to give yourself the absolute best chances of avoiding over-optimization while still maximizing your gains.

The right way to optimize is:

Walk Forward Optimization and Testing

Walk forward testing as described here is largely based on the pioneering work of Robert Pardo and has become a well-accepted approach among top professionals for optimizing and testing automated trading systems.

It is essentially a form of iterative in-sample optimization followed by out-of-sample verification testing. It ensures the optimization is based on the most recent market conditions while at the same time demonstrating that the robot is optimizable for a variety of market conditions.
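The optimize-then-verify cycle can be sketched as a small Python loop. This is a minimal sketch, not the course's or MT4's implementation: `walk_forward`, `optimize` and `evaluate` are hypothetical names, the caller supplies the two callables, and the loop assumes a fixed-length in-sample window that slides forward by one out-of-sample block each cycle.

```python
from typing import Any, Callable, List, Sequence, Tuple


def walk_forward(
    data: Sequence[Any],
    in_sample: int,
    out_of_sample: int,
    optimize: Callable[[Sequence[Any]], Any],
    evaluate: Callable[[Any, Sequence[Any]], float],
) -> List[Tuple[Any, float]]:
    """Run repeated optimize-then-verify cycles over `data`.

    Returns one (settings, out_of_sample_score) pair per cycle.
    """
    results = []
    start = 0
    # Stop once a full in-sample + out-of-sample pair no longer fits.
    while start + in_sample + out_of_sample <= len(data):
        split = start + in_sample
        train = data[start:split]
        unseen = data[split:split + out_of_sample]
        settings = optimize(train)          # fitted on in-sample data only
        score = evaluate(settings, unseen)  # judged on data the optimizer never saw
        results.append((settings, score))
        start += out_of_sample              # assumption: slide by one out-of-sample block
    return results
```

The point of the structure is that `evaluate` only ever receives data that `optimize` did not see, so each score is an out-of-sample verification rather than a replay of the fitted period.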

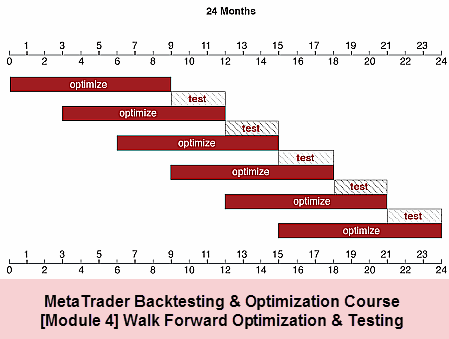

Walk Forward Example

Imagine we have 24 months of data (tick data or M1 data). Instead of running a back test and optimizing over the entire 24 months, we break the 24 months up into segments as shown in the diagram above.

We start at the very beginning of our 24 months of data and run an in-sample optimization over the first 9 months. Then we perform an out-of-sample test of the optimal settings over the next 3 months. We then repeat this optimize-and-test cycle another 5 times as shown in the diagram above, taking the results into account each time.
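The segment layout for this example can be worked out with a short Python sketch. The function name `walk_forward_windows` and the `step` parameter are hypothetical, and the text does not state how far each cycle advances, so the sketch assumes the window moves forward by the 3-month out-of-sample length. The number of cycles that fit in 24 months depends on that step, so the diagram's exact layout may differ from what this prints.

```python
def walk_forward_windows(total_months, in_sample, out_of_sample, step=None):
    """Return (is_start, is_end, oos_start, oos_end) month offsets, end-exclusive."""
    if step is None:
        step = out_of_sample  # assumption: advance by one out-of-sample block
    windows = []
    start = 0
    # Keep adding windows while a full in-sample + out-of-sample pair fits.
    while start + in_sample + out_of_sample <= total_months:
        is_end = start + in_sample
        windows.append((start, is_end, is_end, is_end + out_of_sample))
        start += step
    return windows


for is_start, is_end, oos_start, oos_end in walk_forward_windows(24, 9, 3):
    print(f"optimize on months {is_start}-{is_end}, test on months {oos_start}-{oos_end}")
```

Passing a different `step` changes how many optimize-and-test pairs fit into the same 24 months.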
